Large Language Models (LLMs) have gained significant attention in recent years, yet understanding their internal mechanisms remains challenging. When examining individual attention heads in Transformer models, researchers have identified specific functionalities in some heads, such as induction heads that predict tokens like ‘Potter’ following ‘Harry’ when the phrase appears in context. Ablation studies confirm these heads’ causal relationship to model behaviours. However, most attention heads distribute focus across diverse contexts without clear functionality. The challenge lies in interpreting these complex attention patterns, as inter-head collaboration often occurs rather than isolated functionality. This phenomenon resembles feature superposition in neural interpretation, suggesting the existence of attention superposition in Multi-Head Self-Attention (MHSA) mechanisms. Understanding these complex interactions is crucial for developing more transparent and controllable language models.

Previous research has made significant strides in explaining individual attention head functionality using techniques like activation patching and path patching. These approaches have identified several specialised attention heads in transformer models, including composition heads, induction heads, name mover heads, number comparison heads, copy suppression heads, successor heads, and long context retrieval heads. However, the superposition hypothesis suggests that neurons relate to multiple non-orthogonal underlying features rather than single functionalities. Sparse Autoencoders have emerged as a promising method to extract overcomplete sets of sparse, linearly comprehensible features from neural networks. The success of these autoencoders demonstrates the universality of superposition across various dimensions, including model size, architecture types, and even different modalities. These methods, while valuable, still struggle to fully explain the complex interactions between attention heads and their collaborative behaviour in language models.

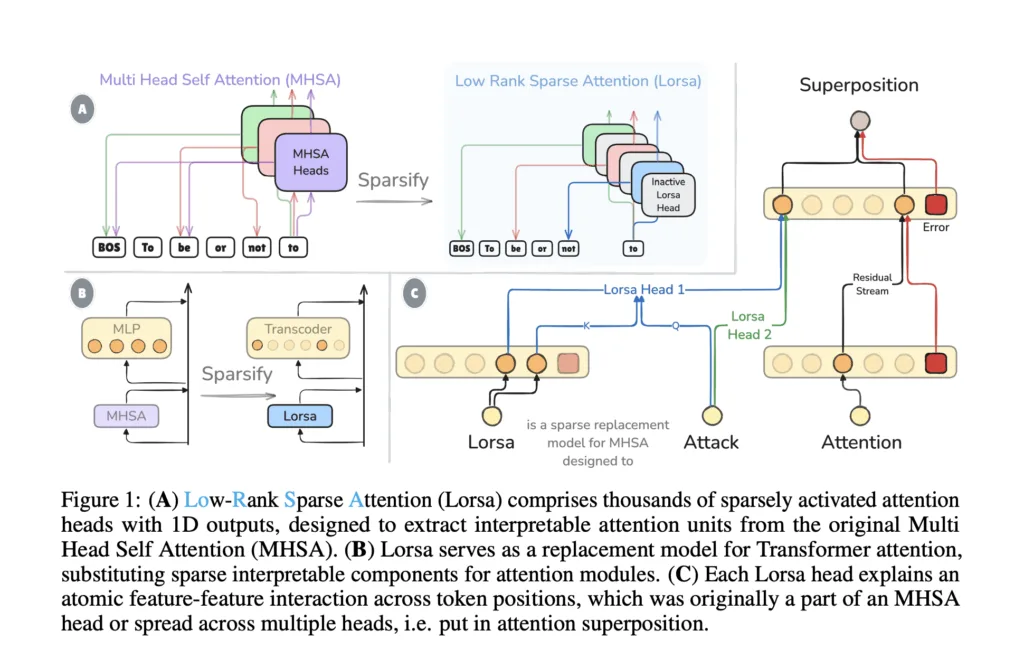

Researchers from the Shanghai Innovation Institute, the OpenMOSS Team, and the School of Computer Science at Fudan University introduce Low-Rank Sparse Attention (Lorsa), a robust approach to disentangle atomic attention units from attention superposition. Lorsa replaces standard Multi-Head Self-Attention with an overcomplete set of attention heads that feature single-dimensional OV circuits and sparsity constraints. To evaluate Lorsa, the researchers developed an exploration interface that provides comprehensive information on each Lorsa head, and they quantitatively assessed interpretability through top activations and attribution patterns. Results demonstrate that Lorsa's monosemanticity compares favorably to Sparse Autoencoder features. The method was tested on both Pythia-160M and Llama-3.1-8B, where it successfully identified known attention mechanisms such as induction heads, name mover heads, successor heads, and attention sinks. Further analysis revealed arithmetic-specific Lorsa heads in Llama-3.1-8B and identified thematic anchor heads exhibiting long-range, topic-specific attention patterns. This approach offers a more fine-grained view of transformer attention mechanisms than inspecting MHSA heads individually.

Attention superposition in Transformer models parallels how neurons represent more features than their dimensions. The research hypothesises that MHSA comprises multiple attention units in superposition, each attending between specific token pairs with interpretable read/write operations on the residual stream. This hypothesis suggests atomic attention units spread across multiple MHSA heads, while individual heads contain multiple units.

Three key pieces of evidence support attention superposition. First, polysemantic heads respond to unrelated inputs: successor heads, for example, increment days and numbers while also exhibiting acronym and copying behaviours. Second, most attention heads lack clear interpretation patterns, with studies showing failed interpretation attempts for over 90% of GPT-2 heads. Third, direct observations show attention output features collectively contributed by multiple heads, with approximately 25% of learned attention units spread across multiple MHSA heads.

Understanding attention superposition matters for two key reasons. First, attribution-based circuit tracing becomes challenging when features are computed collectively, as individual Query-Key patterns may be misleading due to interference from other features within the same heads. Second, the structure of attention superposition may reveal important model biology motifs, raising questions about why certain attention units, like induction heads, are implemented by single MHSA heads while others exist in superposition.

The Lorsa architecture addresses these challenges through several design elements. Lorsa is trained to predict MHSA outputs by minimising mean squared error. It employs one-dimensional OV circuits that restrict each head's read/write operations to specific residual stream features, aligning with the linear representation hypothesis. For Query and Key weights, Lorsa shares parameters across each group of D_Lorsa^QK heads, maintaining parameter efficiency while preserving performance. This strategy makes Lorsa QK circuits similar to those of MHSA, but with sparsity constraints on each OV dimension.
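To make the one-dimensional OV circuit concrete, here is a minimal sketch of a single Lorsa-style head in plain Python. It is not the authors' implementation: the function names, the causal softmax attention, and the scaling by the square root of the QK dimension are assumptions made for illustration, and QK parameter sharing between heads is omitted. The point it shows is that each head reads one scalar per position (along `w_v`) and writes it back along one direction (`w_o`), and that training would compare the summed head outputs against the MHSA output with a mean squared error.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lorsa_head_output(xs, w_q, w_k, w_v, w_o):
    """One illustrative head with a rank-1 (one-dimensional) OV circuit.

    xs:  residual-stream vectors, one per token position.
    w_q, w_k: lists of row vectors projecting into query/key space.
    w_v: single read direction; w_o: single write direction.
    Returns (outputs, activations), one entry per position.
    """
    d_qk = len(w_q)
    queries = [[dot(row, x) for row in w_q] for x in xs]
    keys = [[dot(row, x) for row in w_k] for x in xs]
    values = [dot(w_v, x) for x in xs]  # one scalar per position
    outputs, activations = [], []
    for i in range(len(xs)):
        # Causal attention (assumed): position i sees positions 0..i.
        scores = [dot(queries[i], keys[j]) / math.sqrt(d_qk)
                  for j in range(i + 1)]
        attn = softmax(scores)
        z = sum(a * values[j] for j, a in enumerate(attn))
        activations.append(z)
        outputs.append([z * w for w in w_o])  # write along one direction
    return outputs, activations


def mse(pred, target):
    """Mean squared error between two lists of vectors."""
    count = sum(len(p) for p in pred)
    total = sum((p - t) ** 2
                for pr, tr in zip(pred, target)
                for p, t in zip(pr, tr))
    return total / count
```

With all-zero QK weights the attention is uniform over visible positions, so a head reading the first coordinate of `[[1, 0], [0, 1]]` has activation 1.0 at the first position and 0.5 at the second.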

Lorsa employs orders of magnitude more heads than standard MHSA while activating only a small subset per token. For each position, Lorsa’s output aggregates only the top-K heads with the largest activation values, with the active head subset varying dynamically across token positions. This approach resembles TopK-SAEs, selecting the most salient linear components. While similar to attention Sparse Autoencoders, Lorsa differs in that its head activations derive from attention patterns of previous tokens rather than simple linear encoders with ReLU.
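The per-position top-K selection can be sketched as follows. This is an illustrative reading of the description above, not code from the paper: the function name `topk_aggregate` and the data layout are hypothetical, and heads are ranked by raw activation value (ranking by absolute value would be an equally plausible interpretation).

```python
def topk_aggregate(activations, write_dirs, k):
    """Sum only the k most active heads at each token position.

    activations[h][i]: scalar activation of head h at position i.
    write_dirs[h]:     output (write) direction of head h.
    Returns (outputs, active), where active[i] lists the heads kept
    at position i.
    """
    n_heads = len(activations)
    n_pos = len(activations[0])
    d_model = len(write_dirs[0])
    outputs, active = [], []
    for i in range(n_pos):
        # Rank heads by activation at this position (assumption: raw value).
        ranked = sorted(range(n_heads),
                        key=lambda h: activations[h][i],
                        reverse=True)
        chosen = sorted(ranked[:k])
        out = [0.0] * d_model
        for h in chosen:
            for d in range(d_model):
                out[d] += activations[h][i] * write_dirs[h][d]
        outputs.append(out)
        active.append(chosen)
    return outputs, active


# Three hypothetical heads, two positions, keep the top two per position.
acts = [[3.0, 0.1], [1.0, 2.0], [0.5, 5.0]]
dirs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, active = topk_aggregate(acts, dirs, k=2)
```

In this toy example the active set changes between positions (heads 0 and 1 at the first position, heads 1 and 2 at the second), which is the dynamic behaviour the paragraph describes.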

Lorsa’s interpretability assessment employs several key metrics to understand individual head functionality. Top activations help identify patterns by examining the 16 highest-activating tokens for each Lorsa head across 100 million samples from held-out data. The z pattern analysis decomposes activations linearly into token-wise contributions from preceding positions, revealing which previous tokens contribute to current activations. This approach parallels direct feature attribution analysis used for attention Sparse Autoencoders, but with simpler attribution involving just one one-dimensional OV circuit and a single QK circuit.
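Because each head has a single scalar value per source position, the z pattern reduces to a simple elementwise product. The sketch below assumes the head activation at a query position is the attention-weighted sum of per-token scalar values; the function name, tokens, and numbers are invented for illustration.

```python
def z_pattern(attn_row, values):
    """Split one head activation into per-token contributions.

    attn_row[j]: attention weight from the current position to token j.
    values[j]:   scalar value read from token j by the 1-D OV circuit.
    Returns (contributions, activation); the contributions sum to the
    activation.
    """
    contributions = [a * v for a, v in zip(attn_row, values)]
    return contributions, sum(contributions)


# Hypothetical context of three preceding tokens.
tokens = ["thank", "you", "for"]
contribs, activation = z_pattern([0.1, 0.7, 0.2], [1.0, 2.0, -0.5])

# The token with the largest contribution explains most of the activation.
top_index = max(range(len(contribs)), key=lambda j: contribs[j])
top_token = tokens[top_index]
```

Here the middle token dominates the activation, which is the kind of signal the z pattern is meant to surface for a head.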

A visualisation dashboard provides comprehensive information about each Lorsa head. For example, a “you”-specific induction head shows several important patterns: it primarily reads from features indicating the current token is “you”/“your” through its weight vector, strongly activates a “say you” feature that amplifies the logit of “you,” and increases prediction probabilities for various “you” tokens. The QK attention pattern computation involves current token features at the query position and previous token features where the current token is “you,” with the previous token often being words like “with,” “thank,” or “do.” Interestingly, this particular Lorsa head is almost equally distributed between two MHSA heads (5.0 and 5.7), demonstrating how Lorsa successfully disentangles attention units that exist across multiple standard attention heads.

Results confirm Lorsa’s effectiveness in identifying known attention mechanisms across different models. Using path patching, researchers rediscovered previously documented monosemantic heads in Pythia-160M, including induction heads, name mover heads, copy suppression heads, successor heads, and attention sinks. In Llama-3.1-8B, they identified arithmetic-specific Lorsa heads that activate during simple arithmetic operations, with each head using distinct heuristics to fetch operands. They also discovered “thematic anchor” heads that exhibit long-range attention to topically related tokens, suggesting a mechanism for maintaining persistent topic representations that bias subsequent token predictions toward domain-appropriate vocabulary and structures.

Low-Rank Sparse Attention successfully disentangles atomic attention units from attention superposition in Transformer models. The method effectively recovers known attention mechanisms while uncovering new interpretable behaviours, demonstrating its value for neural network interpretability. Despite these advances, significant challenges remain in unbinding QK circuits to achieve fully independent heads and reducing superposition effects. Future research directions include exploring low-dimensional QK structures, cross-layer superposition, and systematic Q/K/V composition.

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.