Large language models (LLMs) have made significant strides in language tasks such as conversational AI, reasoning, and code generation. However, human communication extends beyond text and often relies on visual elements to aid understanding. A truly versatile AI therefore needs to process and generate both text and visual information. Training unified vision-language models from scratch, whether with autoregressive token prediction or with a hybrid of diffusion and language losses, has shown strong performance, but it requires vast computational resources and retraining for each new modality. An alternative is to add vision capabilities to a pretrained LLM, which is more efficient but often compromises the language model’s original performance.

Current research has focused on three main strategies: merging LLMs with standalone image generation models, training large multimodal models end-to-end, and combining diffusion and autoregressive losses. While these methods have achieved state-of-the-art results, they either require retraining large models or degrade the LLM’s core capabilities. Even so, adding vision components to pretrained LLMs has shown significant potential, particularly in image understanding and generation, though existing methods remain limited in efficiency and flexibility.

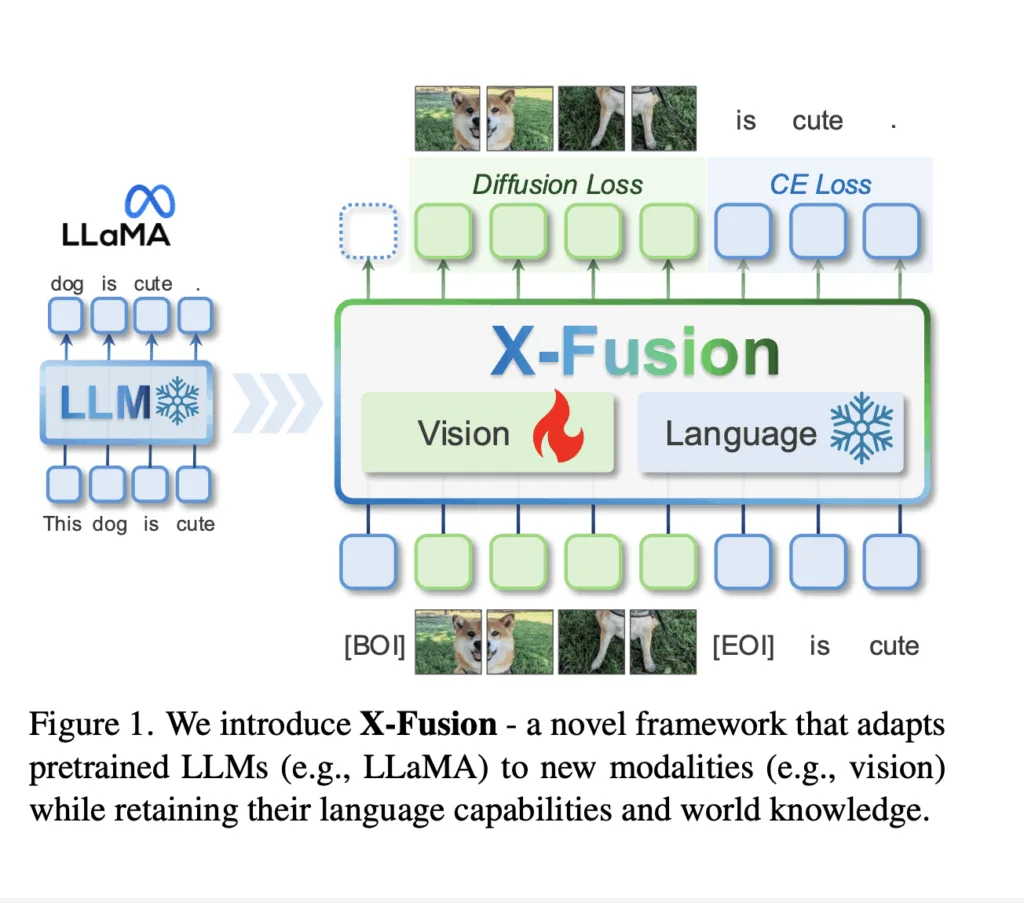

Researchers from UCLA, the University of Wisconsin-Madison, and Adobe Research propose X-Fusion, which adapts pretrained LLMs for multimodal tasks while preserving language capabilities. X-Fusion uses a dual-tower architecture, freezing the LLM’s language weights while adding a vision-specific tower to process visual information. The approach aligns text and vision features at multiple levels, improving performance in image-to-text and text-to-image tasks. Through ablation studies, the researchers emphasize the importance of clean image data for training and show that aligning vision features with pretrained representations accelerates convergence, especially for smaller models.

In more detail, X-Fusion keeps the LLM’s text weights frozen and introduces a separate, trainable vision tower for visual information. Images are tokenized using a pretrained encoder, and image and text tokens are jointly optimized. An optional X-Fuse operation merges features from both towers for enhanced performance. The model is trained with autoregressive and image denoising losses and is evaluated on image generation (text-to-image) and image understanding (image-to-text) tasks.
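To make the dual-tower idea concrete, here is a minimal pure-Python sketch of a single layer. It is an illustration under stated assumptions, not the authors' implementation: the `Token` class, the toy `frozen_text_block` and `trainable_vision_block` functions, and the convex-combination `blend` rule standing in for X-Fuse are all hypothetical. The sketch assumes both towers see the full sequence, that each token keeps the output of its own modality's tower, and that the optional fusion mixes in the other tower's output.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Vector = List[float]
# A "block" maps a sequence of feature vectors to a same-length sequence.
Block = Callable[[List[Vector]], List[Vector]]


@dataclass
class Token:
    modality: str   # "text" or "image"
    features: Vector


def blend(own: Vector, other: Vector, weight: float) -> Vector:
    """Hypothetical fusion rule: convex combination of the two towers' features."""
    return [(1.0 - weight) * a + weight * b for a, b in zip(own, other)]


def dual_tower_layer(
    tokens: List[Token],
    text_block: Block,
    vision_block: Block,
    fuse_weight: Optional[float] = None,
) -> List[Token]:
    """Run both towers on the full sequence and pick outputs per modality."""
    inputs = [t.features for t in tokens]
    text_out = text_block(inputs)      # frozen tower: weights never updated
    vision_out = vision_block(inputs)  # trainable tower: the only part that learns
    result = []
    for tok, from_text, from_vision in zip(tokens, text_out, vision_out):
        if tok.modality == "text":
            own, other = from_text, from_vision
        else:
            own, other = from_vision, from_text
        # Without fusion, each token simply keeps its own tower's output.
        feats = own if fuse_weight is None else blend(own, other, fuse_weight)
        result.append(Token(tok.modality, feats))
    return result


# Toy stand-ins for real transformer blocks (assumptions for illustration only).
def frozen_text_block(seq: List[Vector]) -> List[Vector]:
    return [[2.0 * x for x in v] for v in seq]


def trainable_vision_block(seq: List[Vector]) -> List[Vector]:
    return [[x + 1.0 for x in v] for v in seq]


tokens = [Token("text", [1.0, 2.0]), Token("image", [3.0, 4.0])]
plain = dual_tower_layer(tokens, frozen_text_block, trainable_vision_block)
fused = dual_tower_layer(tokens, frozen_text_block, trainable_vision_block, fuse_weight=0.5)
```

In this toy setup, text tokens only ever pass through the frozen block when fusion is off, which is one way to see why the language behaviour can stay intact while the vision tower is trained.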

The study compares the Dual Tower architecture against alternative transformer variants for multimodal integration: the Single Tower, Gated Tower, and Dual Projection designs. The Dual Tower performs best in both image generation and understanding, outperforming the other designs by 23% in FID (a metric where lower is better) without increasing the number of trainable parameters. The study also investigates the effects of noise and data ratios, finding that clean images improve both understanding and generation. Additionally, aligning vision features with a pretrained encoder like CLIP boosts performance, especially for smaller models.
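One common way to express this kind of feature alignment is a cosine-similarity penalty between the model's vision features and the features of a pretrained encoder. The sketch below is an assumption for illustration: the article does not specify the loss the authors use, and the function names `cosine_similarity` and `alignment_loss` are hypothetical. It also assumes both feature sets already have the same dimensionality, which in practice would usually require a learned projection.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def alignment_loss(
    vision_features: List[Sequence[float]],
    encoder_features: List[Sequence[float]],
) -> float:
    """Hypothetical alignment loss: 1 minus the mean per-token cosine similarity.

    The loss is 0 when every feature pair points in the same direction
    and grows as the two sets of features diverge.
    """
    if len(vision_features) != len(encoder_features):
        raise ValueError("feature lists must have the same length")
    if not vision_features:
        raise ValueError("feature lists must not be empty")
    sims = [
        cosine_similarity(v, e)
        for v, e in zip(vision_features, encoder_features)
    ]
    return 1.0 - sum(sims) / len(sims)


# Identical directions give (near) zero loss; orthogonal features give a loss of 1.
aligned = alignment_loss([[3.0, 4.0]], [[3.0, 4.0]])
orthogonal = alignment_loss([[1.0, 0.0]], [[0.0, 1.0]])
```

A term like this would be added to the main training objective with a small weight, so that it nudges the vision tower toward the pretrained representation without dominating the generation and understanding losses.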

In conclusion, X-Fusion is a framework that adapts pretrained LLMs to multimodal tasks, such as image understanding and generation, while preserving language capabilities. It introduces a Dual Tower architecture in which the language weights remain fixed and a separate trainable vision tower processes visual features. Experimental results show that X-Fusion outperforms alternative designs in image-to-text and text-to-image tasks. Key findings include the benefits of incorporating understanding-focused data, reducing noise in image data, and aligning features with a pretrained encoder, especially for smaller models. The research offers useful insights into building efficient multimodal models.

Check out the Paper.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.