Memory plays a crucial role in LLM-based AI systems, supporting sustained, coherent interactions over time. While earlier surveys have explored memory in LLMs, they often pay little attention to the fundamental operations that govern memory functions. Key components such as memory storage, retrieval, and memory-grounded generation have been studied in isolation, but a unified framework that systematically integrates these processes remains underdeveloped. Although a few recent efforts have proposed operational views of memory to categorize existing work, the field still lacks cohesive memory architectures that clearly define how these atomic operations interact.

Furthermore, existing surveys tend to address only specific subtopics within the broader memory landscape, such as long-context handling, long-term memory, personalization, or knowledge editing. These fragmented approaches often miss essential operations like indexing and fail to offer a comprehensive overview of memory dynamics. Additionally, most prior work does not establish a clear research scope or provide structured coverage of benchmarks and tools, which limits its practical value for guiding future advances in memory for AI systems.

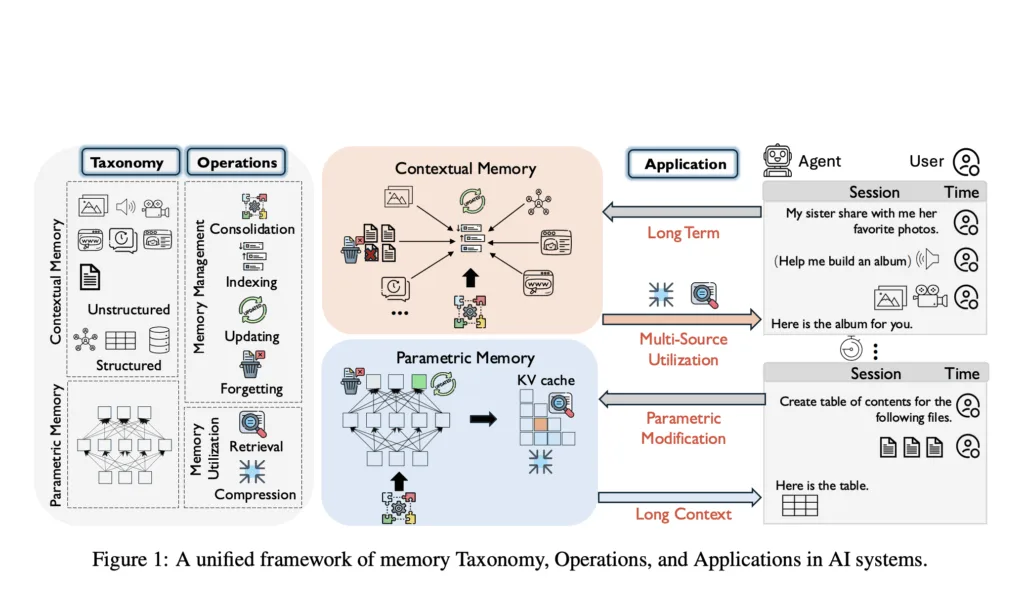

Researchers from the Chinese University of Hong Kong, the University of Edinburgh, HKUST, and the Poisson Lab at Huawei UK R&D Ltd. present a detailed survey on memory in AI systems. They classify memory into parametric, contextual-structured, and contextual-unstructured types and, drawing on cognitive psychology, distinguish short-term from long-term memory. Six fundamental operations (consolidation, updating, indexing, forgetting, retrieval, and compression) are defined and mapped to key research areas, including long-term memory, long-context modeling, parametric modification, and multi-source integration. Based on an analysis of over 30,000 papers ranked with the Relative Citation Index, the survey also outlines tools, benchmarks, and future directions.

The researchers first develop a three-part taxonomy of AI memory: parametric (model weights), contextual-structured (e.g., indexed dialogue histories), and contextual-unstructured (raw text or embeddings), each of which can span short or long time horizons. They then define six core memory operations: consolidation (storing new information), updating (modifying existing entries), indexing (organizing for fast access), forgetting (removing stale data), retrieval (fetching relevant content), and compression (distilling memories). To ground this framework, they mine over 30,000 top-tier AI papers (2022–2025), rank them by Relative Citation Index, and cluster the high-impact works into four themes: long-term memory, long-context modeling, parametric editing, and multi-source integration. This maps each operation and memory type to an active research area and highlights key benchmarks and tools.
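To make the six operations concrete, here is a minimal Python sketch of a toy in-memory store. It is an illustration only, not the survey's architecture: the class `ToyMemoryStore`, its method names, the logical clock, keyword-overlap retrieval, and truncation as a stand-in for compression are all assumptions made for this example.

```python
import re
from dataclasses import dataclass


def tokenize(text):
    """Lowercase alphanumeric tokens, as a set (hypothetical helper)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class MemoryEntry:
    text: str
    created_at: int  # logical timestamp, not wall-clock time


class ToyMemoryStore:
    """Hypothetical store exposing the six operations named in the survey."""

    def __init__(self):
        self.entries = {}   # entry id -> MemoryEntry
        self.index = {}     # token -> set of entry ids (inverted index)
        self.clock = 0      # advances on every write
        self._next_id = 0

    # Indexing: organize entries for fast access.
    def _index(self, entry_id):
        for tok in tokenize(self.entries[entry_id].text):
            self.index.setdefault(tok, set()).add(entry_id)

    def _unindex(self, entry_id):
        for tok in tokenize(self.entries[entry_id].text):
            ids = self.index.get(tok, set())
            ids.discard(entry_id)
            if not ids:
                self.index.pop(tok, None)

    # Consolidation: store new information.
    def consolidate(self, text):
        self.clock += 1
        entry_id = self._next_id
        self._next_id += 1
        self.entries[entry_id] = MemoryEntry(text, self.clock)
        self._index(entry_id)
        return entry_id

    # Updating: modify an existing entry and refresh its timestamp.
    def update(self, entry_id, new_text):
        self._unindex(entry_id)
        self.clock += 1
        self.entries[entry_id] = MemoryEntry(new_text, self.clock)
        self._index(entry_id)

    # Forgetting: drop entries older than max_age logical ticks.
    def forget(self, max_age):
        stale = [i for i, e in self.entries.items()
                 if self.clock - e.created_at > max_age]
        for i in stale:
            self._unindex(i)
            del self.entries[i]
        return stale

    # Retrieval: rank by keyword overlap, breaking ties by recency.
    def retrieve(self, query, k=3):
        scores = {}
        for tok in tokenize(query):
            for i in self.index.get(tok, ()):
                scores[i] = scores.get(i, 0) + 1
        ranked = sorted(
            scores, key=lambda i: (-scores[i], -self.entries[i].created_at))
        return [self.entries[i].text for i in ranked[:k]]

    # Compression: truncation here is a crude stand-in for summarization.
    def compress(self, max_words=8):
        for i, entry in list(self.entries.items()):
            words = entry.text.split()
            if len(words) > max_words:
                self._unindex(i)
                entry.text = " ".join(words[:max_words])
                self._index(i)
```

A real system would likely replace the keyword index with embeddings and the truncation step with a learned summarizer; the point of the sketch is only that the six operations can share one store and one index.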

The study describes a layered ecosystem of memory-centric AI systems that support long-term context management, user modeling, knowledge retention, and adaptive behavior. This ecosystem is structured across four tiers: foundational components (such as vector stores, large language models like Llama and GPT-4, and retrieval mechanisms like FAISS and BM25), frameworks for memory operations (e.g., LangChain and LlamaIndex), memory layer systems for orchestration and persistence (such as Memary and Memobase), and end-user-facing products (including Me.bot and ChatGPT). These tools provide infrastructure for memory integration, enabling capabilities like grounding, similarity search, long-context understanding, and personalized AI interactions.
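As an illustration of the foundational retrieval tier, the sketch below scores documents against a query with the standard Okapi BM25 formula in plain Python. It is not taken from the survey or from any particular library: the function name `bm25_scores`, the regex tokenizer, the smoothed IDF variant, and the defaults `k1=1.5` and `b=0.75` are assumptions chosen for this example.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphanumeric tokens (hypothetical helper)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Return one BM25 score per document for the given query."""
    if not docs:
        return []
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(toks) for toks in tokenized) / n_docs

    # Document frequency: number of documents containing each term.
    doc_freq = Counter()
    for toks in tokenized:
        doc_freq.update(set(toks))

    query_terms = tokenize(query)
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # Smoothed IDF that stays non-negative for common terms.
            idf = math.log(
                (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            # Term-frequency saturation with document-length normalization.
            denom = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores
```

Lexical scoring of this kind is often paired with dense vector search (the role FAISS plays in the tier above), since one matches exact terms and the other matches meaning.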

The survey also discusses open challenges and future research directions in AI memory. It highlights the importance of spatio-temporal memory, which balances historical context with real-time updates for adaptive reasoning. Key challenges include parametric memory retrieval, lifelong learning, and efficient knowledge management across memory types. The paper also draws inspiration from biological memory models, emphasizing dual-memory architectures and hierarchical memory structures. It argues that future work should focus on unifying memory representations, supporting multi-agent memory systems, and addressing security concerns, particularly memory safety and malicious attacks against the underlying machine learning techniques.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.