In this tutorial, we lean hard on Together AI’s growing ecosystem to show how quickly we can turn unstructured text into a question-answering service that cites its sources. We’ll scrape a handful of live web pages, slice them into coherent chunks, and feed those chunks to the togethercomputer/m2-bert-80M-8k-retrieval embedding model. Those vectors land in a FAISS index for millisecond similarity search, after which a lightweight ChatTogether model drafts answers that stay grounded in the retrieved passages. Because Together AI handles embeddings and chat behind a single API key, we avoid juggling multiple providers, quotas, or SDK dialects.

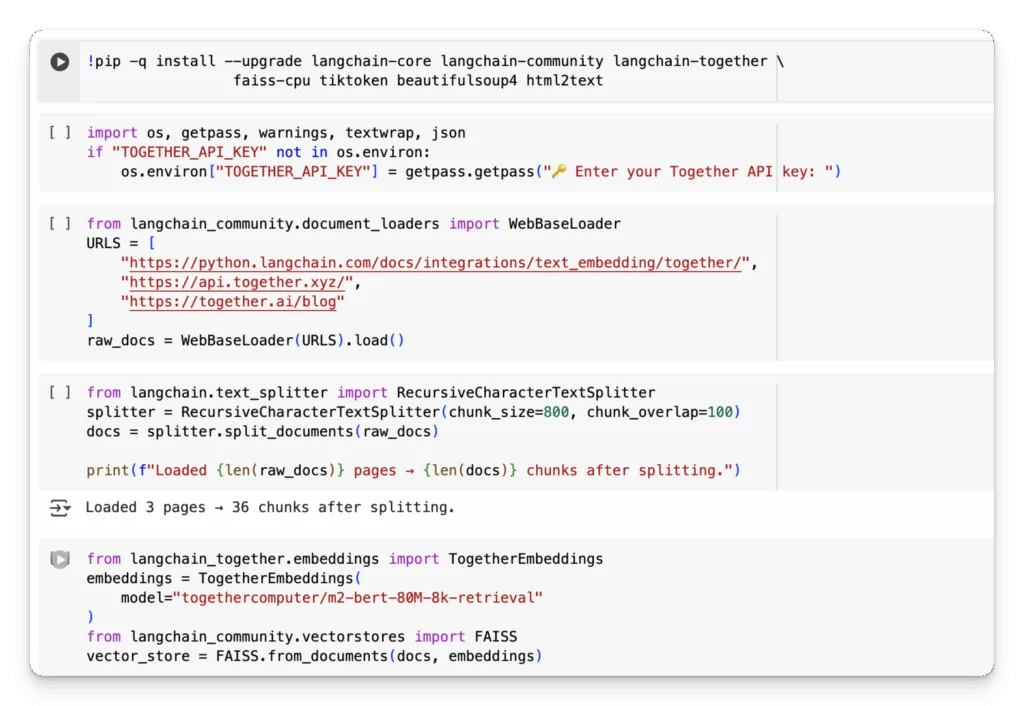

    !pip -q install --upgrade langchain-core langchain-community langchain-together faiss-cpu tiktoken beautifulsoup4 html2text

This quiet (-q) pip command installs or upgrades everything the Colab RAG notebook needs: the core LangChain libraries plus the Together AI integration, FAISS for vector search, tiktoken for token handling, and beautifulsoup4 and html2text for lightweight HTML parsing. With these in place, the notebook runs end-to-end without additional setup.

    import os, getpass, warnings, textwrap, json

    if "TOGETHER_API_KEY" not in os.environ:
        os.environ["TOGETHER_API_KEY"] = getpass.getpass("🔑 Enter your Together API key: ")

We check whether the TOGETHER_API_KEY environment variable is already set; if it is not, we prompt for the key with getpass, which hides the input, and store it in os.environ. Because the key is captured once per runtime, the rest of the notebook can call Together AI’s API without hard-coding secrets or exposing them in plain text.
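The same check can be wrapped in a small reusable helper. This is a minimal sketch, not part of the original notebook: the function name `ensure_api_key` and its `env` and `prompt` parameters are hypothetical, added so the logic can be exercised without touching the real environment.

```python
import getpass
import os
from typing import Callable, MutableMapping


def ensure_api_key(
    name: str = "TOGETHER_API_KEY",
    env: MutableMapping[str, str] = os.environ,
    prompt: Callable[[str], str] = getpass.getpass,
) -> str:
    """Return the key stored under `name`, prompting once if it is missing or blank."""
    key = env.get(name, "").strip()
    if not key:
        # getpass hides the typed characters, so the key never appears on screen.
        key = prompt(f"Enter your {name}: ").strip()
        if not key:
            raise ValueError(f"{name} must not be empty")
        env[name] = key
    return key
```

Calling `ensure_api_key()` with no arguments behaves like the snippet above, with the extra guard that an empty key is rejected instead of being stored.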

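    from langchain_community.document_loaders import WebBaseLoader

    URLS = [
        "",  # URL missing from the source text
        "",  # URL missing from the source text
        "",  # URL missing from the source text
    ]

    raw_docs = WebBaseLoader(URLS).load()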

WebBaseLoader fetches each URL, parses the HTML with BeautifulSoup, and returns LangChain Document objects containing the extracted page text plus metadata such as the source URL. By passing a list of Together-related links, we immediately collect live documentation and blog content that will later be chunked and embedded for semantic search.
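To make the tag-stripping idea concrete, here is a rough standard-library sketch. It is an illustration only and not how WebBaseLoader is implemented; the class name `TextExtractor` and the helper `html_to_text` are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of <script> and <style>."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self._parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Collapse runs of whitespace and drop empty fragments.
        cleaned = " ".join(data.split())
        if cleaned and not self._skip_depth:
            self._parts.append(cleaned)

    def text(self) -> str:
        return " ".join(self._parts)


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML string as a single line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()
```

A real page usually also carries navigation menus and footers, so inspecting a few loaded documents before embedding them is a sensible precaution.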

    from langchain.text_splitter import RecursiveCharacterTextSplitter

    splitter = RecursiveCharacterTextSplitter(chunk_size=800, chunk_overlap=100)
    docs = splitter.split_documents(raw_docs)

    print(f"Loaded {len(raw_docs)} pages → {len(docs)} chunks after splitting.")

RecursiveCharacterTextSplitter slices every fetched page into segments of up to roughly 800 characters with a 100-character overlap, so contextual clues aren’t lost at chunk boundaries. The resulting list, docs, holds these bite-sized LangChain Document objects, and the printout shows how many chunks were produced from the original pages. This is essential prep for high-quality embedding.
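The effect of chunk_size and chunk_overlap is easiest to see with a plain sliding window. The sketch below is a simplification: RecursiveCharacterTextSplitter prefers to break on separators such as paragraphs and sentences, whereas this hypothetical `chunk_text` helper cuts at fixed character offsets.

```python
def chunk_text(text: str, chunk_size: int = 800, chunk_overlap: int = 100) -> list[str]:
    """Split text into fixed-size windows where consecutive windows share an overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks


sample = "".join(chr(97 + i % 26) for i in range(2000))
chunks = chunk_text(sample)
print([len(c) for c in chunks])  # [800, 800, 600]
```

With the settings used in this tutorial, each window advances 700 characters, so the last 100 characters of one chunk reappear at the start of the next.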

    from langchain_together.embeddings import TogetherEmbeddings

    embeddings = TogetherEmbeddings(
        model="togethercomputer/m2-bert-80M-8k-retrieval"
    )

    from langchain_community.vectorstores import FAISS

    vector_store = FAISS.from_documents(docs, embeddings)

Here we instantiate Together AI’s 80M-parameter m2-bert retrieval model as a drop-in LangChain embedder, then feed every text chunk into it while FAISS.from_documents builds an in-memory vector index. The resulting vector store supports fast nearest-neighbor searches (LangChain’s FAISS wrapper uses Euclidean, or L2, distance by default rather than cosine similarity), turning our scraped pages into a searchable semantic database.
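Conceptually, a flat index compares the query vector against every stored vector and keeps the closest ones. The sketch below shows that idea in pure Python using L2 distance, which, as far as we know, is the default in LangChain's FAISS wrapper. The function names and toy vectors are hypothetical; FAISS performs the same comparison in optimized native code.

```python
import math


def l2_distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k(query: list[float], vectors: list[list[float]], k: int = 4) -> list[tuple[int, float]]:
    """Return (index, distance) pairs for the k stored vectors closest to the query."""
    scored = [(i, l2_distance(query, v)) for i, v in enumerate(vectors)]
    scored.sort(key=lambda pair: pair[1])  # smallest distance first
    return scored[:k]


# Toy 2-D "embeddings"; real ones have hundreds of dimensions.
store = [[0.0, 0.0], [1.0, 0.0], [0.0, 3.0], [5.0, 5.0]]
nearest = top_k([0.9, 0.0], store, k=2)
print([index for index, _ in nearest])  # [1, 0]
```

For unit-length vectors, ranking by L2 distance and ranking by cosine similarity give the same order, so normalizing embeddings is one way to get cosine-style retrieval from an L2 index.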

    from langchain_together.chat_models import ChatTogether

    llm = ChatTogether(
        model="mistralai/Mistral-7B-Instruct-v0.3",
        temperature=0.2,
        max_tokens=512,
    )

ChatTogether wraps a chat-tuned model hosted on Together AI, here Mistral-7B-Instruct-v0.3, so that it can be used like any other LangChain chat model. A low temperature of 0.2 makes sampling nearly deterministic, which keeps answers focused and repeatable, while max_tokens=512 leaves room for detailed, multi-paragraph responses without runaway cost.
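Why a low temperature makes output more repeatable can be seen in the textbook formulation of temperature sampling, where logits are divided by the temperature before the softmax. The sketch below uses made-up logits and a hypothetical helper name; how Together AI's serving stack applies temperature internally is not covered by this tutorial.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities after scaling them by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # illustrative scores for three candidate tokens
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At temperature 0.2 almost all of the probability mass sits on the top-scoring token, so repeated runs tend to produce the same answer.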

    from langchain.chains import RetrievalQA

    qa_chain = RetrievalQA.from_chain_type(
        llm=llm,
        chain_type="stuff",
        retriever=vector_store.as_retriever(search_kwargs={"k": 4}),
        return_source_documents=True,
    )

RetrievalQA stitches the pieces together: it takes our FAISS retriever (returning the 4 most similar chunks) and feeds those snippets into the llm using the simple “stuff” chain type, which concatenates all retrieved chunks into a single prompt. Setting return_source_documents=True means each answer comes back with the retrieved passages that were placed in its prompt, giving us instant, citation-ready Q-and-A.
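The "stuff" strategy amounts to pasting every retrieved chunk into one prompt ahead of the question. The sketch below illustrates that with a hypothetical `build_stuff_prompt` helper; the template wording is an assumption for illustration and is not LangChain's actual default prompt.

```python
def build_stuff_prompt(question: str, chunks: list[str]) -> str:
    """Concatenate retrieved chunks into a single context block followed by the question."""
    context = "\n\n".join(chunk.strip() for chunk in chunks)
    return (
        "Use the following context to answer the question. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


print(build_stuff_prompt(
    "What is FAISS?",
    ["FAISS is a library for vector similarity search.", "It can run fully in memory."],
))
```

Because everything goes into one prompt, the combined chunks must fit in the model's context window. With four chunks of about 800 characters each, this setup stays well within typical limits.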

    QUESTION = "How do I use TogetherEmbeddings inside LangChain, and what model name should I pass?"

    # RetrievalQA expects its input under the "query" key.
    result = qa_chain.invoke({"query": QUESTION})

    print("\n🤖 Answer:\n", textwrap.fill(result['result'], 100))
    print("\n📄 Sources:")
    for doc in result['source_documents']:
        print(" •", doc.metadata['source'])

Finally, we send a natural-language query through the qa_chain, which retrieves the four most relevant chunks, feeds them to the ChatTogether model, and returns a concise answer. We then print the wrapped response, followed by the source URL of each retrieved chunk, giving us both the synthesized explanation and transparent citations in one shot.
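Several of the four retrieved chunks can come from the same page, in which case the source list repeats a URL. A small optional helper, sketched here with the hypothetical name `unique_sources`, removes duplicates while keeping the retrieval order. It works on plain metadata dictionaries, so it can be tried without running the chain.

```python
from typing import Iterable, Mapping


def unique_sources(metadatas: Iterable[Mapping[str, str]]) -> list[str]:
    """Return each distinct 'source' value once, in first-seen order."""
    seen = set()
    ordered = []
    for meta in metadatas:
        source = meta.get("source", "unknown")
        if source not in seen:
            seen.add(source)
            ordered.append(source)
    return ordered


# Placeholder URLs for illustration only.
example = [
    {"source": "https://example.com/docs"},
    {"source": "https://example.com/blog"},
    {"source": "https://example.com/docs"},
]
print(unique_sources(example))  # ['https://example.com/docs', 'https://example.com/blog']
```

In the notebook it could be called as `unique_sources(doc.metadata for doc in result["source_documents"])`.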

In roughly fifty lines of code, we built a complete RAG loop powered end-to-end by Together AI: ingest, embed, store, retrieve, and converse. The approach is deliberately modular: swap FAISS for Chroma, trade the 80M-parameter embedder for Together’s larger multilingual model, or plug in a reranker without touching the rest of the pipeline. What remains constant is the convenience of a unified Together AI backend: fast, affordable embeddings, chat models tuned for instruction following, and a generous free tier that makes experimentation painless. Use this template to bootstrap an internal knowledge assistant, a documentation bot for customers, or a personal research aide.

