LLMs have gained outstanding reasoning capabilities through reinforcement learning (RL) on correctness rewards. Modern RL algorithms for LLMs, including GRPO, VinePPO, and Leave-one-out PPO, have moved away from traditional PPO approaches by eliminating the learned value function network in favor of empirically estimated returns. This reduces computational demands and GPU memory consumption, making RL training more feasible with increasingly large models. However, this efficiency comes with a trade-off – the value function could serve as a powerful outcome verifier to evaluate reasoning chain correctness. Without this component, LLMs lose a valuable verification capability that could enhance inference through parallel search strategies like Best-of-N or weighted majority voting.

Recent advances in LLM reasoning have explored various RL techniques, with traditional PPO algorithms showing the value model’s utility as a test-time search verifier. However, the growing trend toward “value-free” RL methods (GRPO, VinePPO, Leave-one-out PPO) eliminates this capability, so recovering it means training a separate model with the associated overhead. Test-time verification offers an alternative way to improve reasoning by scaling computation, using verifier models trained via binary classification, preference learning, or next-token prediction. But these models require large training datasets, additional computational resources, and considerable GPU memory during inference.

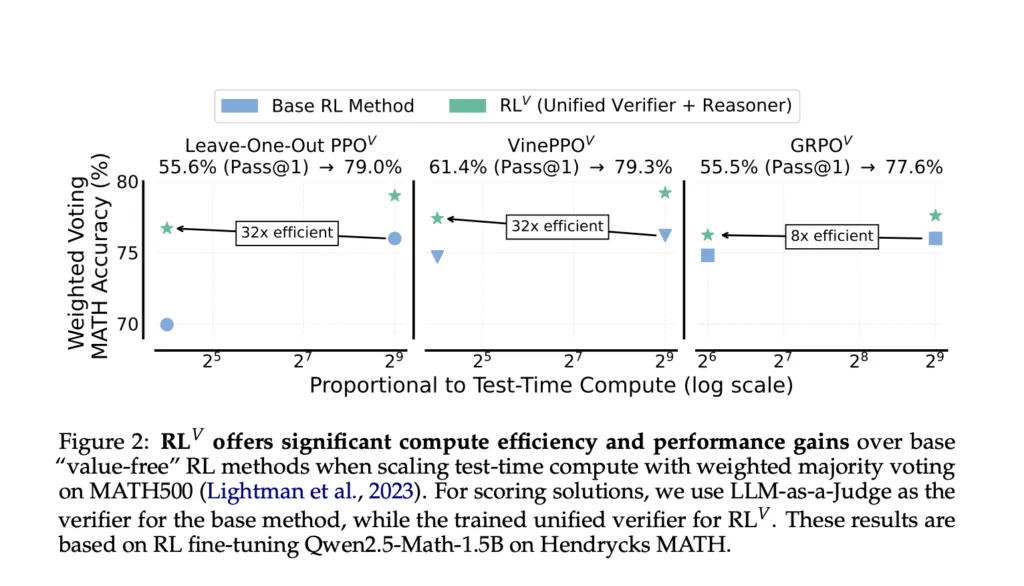

Researchers from McGill University, Université de Montréal, Microsoft Research, and Google DeepMind have proposed RLV to harness the potential of value-like signals in RL for LLMs. RLV augments “value-free” methods with a generative verifier without compromising training scalability. It exploits the LLM’s generation capabilities, using the abundant data produced during RL training to optimize the model as both a reasoner and a verifier. This dual-function approach frames verification as a next-token prediction task, enabling the same LLM to generate solutions while providing an intrinsic score for them. Initial results show RLV boosting MATH accuracy by over 20% compared to base RL methods when using parallel sampling, achieving 8-32 times more efficient test-time compute scaling.
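To make the "verification as next-token prediction" idea concrete, here is a minimal sketch of how an intrinsic score could be read off a model's next-token distribution. It assumes the model is asked a yes/no question about its own solution and that the score is the probability assigned to a "Yes" token; the prompt wording, the token names, and the `verifier_score` helper are illustrative assumptions, not details confirmed by the article.

```python
import math
from typing import Dict


def verifier_score(logits: Dict[str, float], yes_token: str = "Yes") -> float:
    """Return the softmax probability of `yes_token` under the given logits.

    `logits` maps candidate next tokens to raw scores. In a real system these
    would come from the LLM's output layer; here they are a toy stand-in.
    """
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(val - max_logit) for tok, val in logits.items()}
    return exps[yes_token] / sum(exps.values())


# Hypothetical next-token logits after a prompt such as:
#   "<problem> <solution> Is this solution correct? Answer Yes or No."
toy_logits = {"Yes": 2.0, "No": 0.0, "Maybe": -1.0}
score = verifier_score(toy_logits)
print(f"verifier score: {score:.3f}")
```

Because the score comes from the same forward pass machinery used for generation, no separate verifier network has to be loaded at inference time under this assumption.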

RLV unifies a reasoner and generative verifier within a single LLM, addressing four key research questions about parallel test-time compute scaling, verifier training methodologies, test-time usage strategies, and interactions with sequential scaling in thinking models. The setup uses Hendrycks’ MATH dataset for RL training, running on 4×A100 80GB NVIDIA GPUs for 3 hours, with evaluations reported across the MATH500, MATH², GPQA, and AIME 24 benchmarks. For the short-CoT experiments, the researchers fine-tune the Qwen2.5 Math 1.5B model with the GRPO, Leave-One-Out PPO, and VinePPO algorithms, with and without unified verification. Training uses a 1024-token context window, with inference generating up to 1024 tokens for MATH500 and 2048 tokens for the other test sets.

RLV shows strong test-time compute scaling, achieving up to 32 times greater efficiency and 4% higher accuracy than baseline methods on MATH500 with 512 samples. Tests of verification strategies reveal that weighted voting outperforms majority voting and Best-of-N when sampling 8 or more solutions per problem, for both short and long CoT models. RLV also proves complementary to sequential inference compute scaling, with the GRPOV method achieving the highest success rates on AIME 24 at longer generation lengths. Training the unified verifier requires careful tuning of the verification coefficient λ, which presents a significant trade-off in the GRPOV implementation: increasing λ improves verifier accuracy (from ~50% to ~80%).
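The three aggregation strategies compared above differ only in how they combine sampled answers with verifier scores. The sketch below shows one plausible reading of each: majority voting ignores scores, Best-of-N takes the single highest-scored sample, and weighted voting sums scores per distinct answer. The function names, the `Sample` type, and the toy data are assumptions made for illustration, not taken from the paper.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# One sampled solution: (final answer, verifier score in [0, 1]).
Sample = Tuple[str, float]


def majority_vote(samples: List[Sample]) -> str:
    """Pick the most frequent final answer, ignoring verifier scores."""
    counts = Counter(answer for answer, _ in samples)
    return counts.most_common(1)[0][0]


def best_of_n(samples: List[Sample]) -> str:
    """Pick the answer of the single sample with the highest verifier score."""
    return max(samples, key=lambda sample: sample[1])[0]


def weighted_vote(samples: List[Sample]) -> str:
    """Sum verifier scores per distinct answer and pick the largest total."""
    totals: Dict[str, float] = defaultdict(float)
    for answer, score in samples:
        totals[answer] += score
    return max(totals, key=lambda answer: totals[answer])


# Toy data chosen so that the three strategies disagree.
samples = [
    ("42", 0.30), ("42", 0.35), ("42", 0.20),  # frequent but low-scored
    ("17", 0.80), ("17", 0.75),                # fewer votes, high scores
    ("9", 0.90),                               # single confident outlier
]

print(majority_vote(samples))  # "42": three votes
print(best_of_n(samples))      # "9": highest single score (0.90)
print(weighted_vote(samples))  # "17": total 1.55 vs 0.85 for "42" and 0.90 for "9"
```

Weighted voting hedges between the other two: it is less exposed to a single over-confident score than Best-of-N, and less exposed to frequent but poorly scored answers than majority voting.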

In this paper, researchers introduced RLV, which integrates verification into “value-free” RL frameworks without significant computational overhead and shows improvements in reasoning accuracy, test-time compute efficiency, and cross-domain generalization across MATH, MATH², GPQA, and AIME 24 datasets. Future research directions could explore enhancing the generative verifier to produce explicit CoT explanations, though this advancement would require verification-specific CoT data or dedicated RL training processes. The unified framework for solution generation and verification through RL establishes a valuable foundation for continued advancement in LLM reasoning capabilities.

Check out the Paper. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a Tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.